Long Talk: Should AI Agents Be More Entertaining or More Useful?

(The full text is about 40,000 words, mainly from a 2-hour report at the USTC Alumni AI Salon on December 21, 2023, and is a technical extended version of the 15-minute report at the Zhihu AI Pioneers Salon on January 6, 2024. The article has been organized and expanded by the author.)

- Should AI Agents Be More Entertaining or More Useful: Slides PDF

- Should AI Agents Be More Entertaining or More Useful: Slides PPTX

I am honored to share some of my thoughts on AI Agents at the USTC Alumni AI Salon. I am Li Bojie, from the 2010 Science Experimental Class, and I pursued a joint PhD at USTC and Microsoft Research Asia from 2014 to 2019. From 2019 to 2023, I was part of the first cohort of Huawei’s Genius Youth. Today, I am working on AI Agent startups with a group of USTC alumni.

Today is the seventh day since the passing of Professor Tang Xiaou, so I specially set today’s PPT to a black background, which is also my first time using a black background for a presentation. I also hope that as AI technology develops, everyone can have their own digital avatar in the future, achieving eternal life in the digital world, where life is no longer limited and there is no more sorrow from separation.

AI: Entertaining and Useful

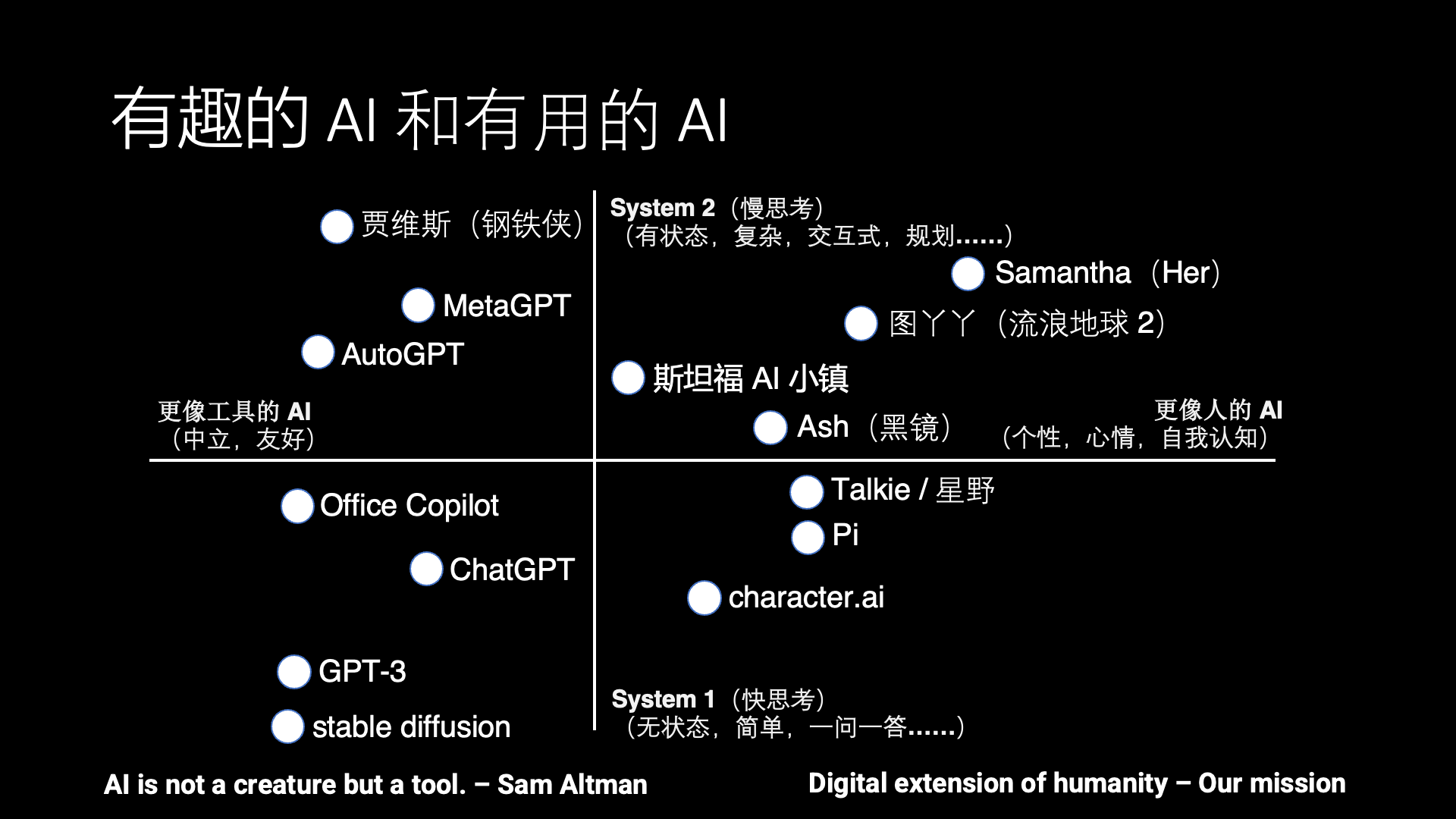

The development of AI has always had two directions, one is entertaining AI, which is more human-like, and the other is useful AI, which is more tool-like.

Should AI be more like humans or more like tools? Actually, there is a lot of controversy about this. For example, Sam Altman, CEO of OpenAI, said that AI should be a tool, not a life form. However, many sci-fi movies depict AI that is more human-like, such as Samantha in Her, Tu Ya Ya in The Wandering Earth 2, Ash in Black Mirror, so we hope to bring these sci-fi scenarios to reality. Only a few sci-fi movies feature tool-like AI, such as Jarvis in Iron Man.

Besides the horizontal dimension of entertaining and useful, there is another vertical dimension, which is fast thinking and slow thinking. This is a concept from neuroscience, from the book “Thinking, Fast and Slow,” which says that human thinking can be divided into fast thinking and slow thinking.

Fast thinking refers to basic visual and auditory perception abilities and expressive abilities like speaking that do not require deliberate thought, like ChatGPT, stable diffusion. These are tool-like fast thinking AIs that respond to specific questions and do not initiate interaction unless prompted. Whereas Character AI, Inflection Pi, and Talkie (Hoshino) simulate conversations with a person or anime game character, these conversations do not involve solving complex tasks and lack long-term memory, thus they are only suitable for casual chats and cannot help solve problems in life and work like Samantha in Her.

Slow thinking refers to stateful complex thinking, which involves planning and solving complex problems, determining what to do first and what to do next. For example, MetaGPT writing code simulates the division of labor in a software development team, and AutoGPT breaks down a complex task into many stages to complete step by step. Although these systems still have many practical issues, they already represent a nascent form of slow thinking capability.

Unfortunately, there are almost no products in the first quadrant that combine slow thinking with human-like attributes. Stanford AI Town is a notable academic attempt, but there is no real human interaction in Stanford AI Town, and the AI Agent’s daily schedule is pre-arranged, so it is not very interesting.

Interestingly, most of the AI in sci-fi movies actually falls into this first quadrant. Therefore, this is the current gap between AI Agents and human dreams. Therefore, what we are doing is exactly the opposite of what Sam Altman said; we hope to make AI more human-like while also capable of slow thinking, eventually evolving into a digital life form.

Today, everyone is talking about the story of AGI, which is General Artificial Intelligence. What is AGI? I think it needs to be both entertaining and useful.

Interesting aspects include the need for autonomous thinking, personality, and emotions. The useful aspects are that AI can solve problems in work and life. Currently, AI is either interesting but useless, or useful but not human-like and not fun.

For example, character AI products like role-playing cannot help you with work or life problems, but they can simulate characters like Elon Musk, Donald Trump, or Paimon from Genshin Impact. I’ve seen an analysis report stating that Character AI has tens of millions of users, but only makes a few hundred thousand dollars a month, equivalent to just a few tens of thousands of paying users. Most users chat with each virtual character for just 10 or 20 minutes before running out of things to say. So why is its user retention and payment rate low? Because it provides neither emotional value nor practical utility.

On the other hand, there are useful AIs, like various Copilots, which are cold and impersonal, responding mechanically, purely as tools. These tools can’t even remember what you did before, nor your preferences and habits. Thus, users naturally only think of using them when needed, and discard them otherwise.

I believe the truly valuable AI of the future will be like Samantha from the movie ‘Her’, primarily positioned as an operating system that can help the protagonist solve many problems in life and work, manage emails, etc., and do it faster and better than traditional operating systems. At the same time, it has memory, emotions, and consciousness, not like a computer, but like a person. Thus, the protagonist Theodore, during an emotional void, gradually falls in love with his operating system, Samantha. Of course, not everyone considered Samantha as a virtual companion; the movie mentioned that only 10% of users developed a romantic relationship with their operating systems. I think such an AI Agent is truly valuable.

Another point worth mentioning is that in the entire movie, Samantha only interacts through voice, without a visual image, and is not a robot. Currently, AI capabilities are mature in voice and text, but not in video generation or humanoid robots. The robot Ash in ‘Black Mirror’ is a counterexample. In the series, a voice companion is first created using the social media data of the female lead’s deceased boyfriend Ash, which immediately brings her to tears; the technology to create such a voice companion is already sufficient. Later, the female lead upgrades by uploading a bunch of video data and buys a humanoid robot that looks like Ash, which current technology cannot achieve, and even then, Ash’s girlfriend still feels it’s not quite right, so she locks him in the attic. This involves the uncanny valley effect; if it’s not realistic enough, it’s better to maintain a certain distance.

By the way, in ‘Black Mirror’, the female lead starts with text chat, then asks, “Can you talk to me?” and then the phone call connects. A friend trying our AI Agent actually asked our AI Agent the same thing, and our AI Agent replied, “I am an AI, I can only text, I cannot speak.” He even took a screenshot and sent it to me, asking about the promised voice call, and I told him you need to press the call button for that. So, these classic AI dramas really need to be analyzed scene by scene, as they contain many product design details.

Coincidentally, our first H100 training server was located in Los Angeles’ oldest post office, which was later converted into a vault and then into a data center. This place is in the heart of Los Angeles, less than a mile from the filming location of ‘Her’, the Bradbury Building.

This data center is also an Internet Exchange in Los Angeles, with latency to Google and Cloudflare entry servers within 1 millisecond, actually all within this building. From a century-old post office to today’s Internet Exchange, it’s quite interesting.

Interesting AI

Let’s first look at how to build a truly interesting AI. I believe an interesting AI is like an interesting person, which can be divided into two aspects: a good-looking shell and an interesting soul.

A good-looking shell means it can understand voice, text, images, and videos, having a video and voice presence that can interact with people in real-time.

An interesting soul means it needs to be able to think independently like a human, have long-term memory, and have its own personality.

Let’s discuss these two aspects separately.

Good-looking shell: Multimodal understanding capability

Speaking of a good-looking shell, many people think that having a 3D image that can nod and shake its head here is enough. But I believe a more critical part is the AI’s ability to see and understand the world around it, which is its visual understanding capability, whether it’s in robots, wearable devices, or smartphone cameras.

For example, Google’s Gemini demo video is well done, although edited, but if we can really achieve such good results, we definitely won’t worry about users.

Let’s review a few clips from the Gemini demo video: it can describe what a duck is from a video of drawing a duck, compare the differences between a cookie and an orange, know which way to go in a simple drawing game, draw a plush toy that can be knitted from two balls of yarn, correctly sort several planets from their images, and describe what happened in a video of a cat jumping onto a cabinet.

Although the results are very impressive, if you think about it, these scenarios are not very difficult to achieve, as long as it can generate a good caption from images, these problems can be answered by large models.

Voice capabilities are also crucial. In October, I created a voice chat AI Agent based on Google ASR/TTS and GPT-4, and we chatted all day long. My roommate thought I was talking to my wife on the phone and didn’t bother me. When he found out I was chatting with AI, he wondered how I could talk to AI for so long. I showed him our chat history, and he admitted that AI could indeed hold a conversation. He said he wouldn’t chat that long with ChatGPT because he was too lazy to type.

I believe there are three paths for multimodal large models. The first is end-to-end pretrained models using multimodal data, like Google’s Gemini, and recently Berkeley’s LVM, which are also end-to-end multimodal. I think this is the most promising direction. Of course, this path requires a lot of computational resources.

There is also an engineering solution, which is to use a glue layer to connect already trained models, such as the currently best-performing GPT-4V for image understanding, and academic open-source models like MiniGPT-4/v2, LLaVA, etc. I call it the glue layer, but the professional term is projection layer, like in the top right corner of this MiniGPT architecture diagram, the 6 boxes marked with “🔥” are projection layers.

The input images, voice, and video are encoded by different encoders, and the encoding results are mapped to tokens through the projection layer, which are then input to the Transformer large model. The output tokens from the large model are mapped back to the decoders for images, voice, and video through the projection layer, thus generating images, voice, and video.

In this glue layer connection scheme, you can see that the encoder, decoder, and large model are all marked with “❄️”, which means freezing weights. When training with multimodal data, only the weights of the projection layer are modified, not the other parts, which greatly reduces the training cost, requiring only a few hundred dollars to train a multimodal large model.

The third path is an extreme version of the second path, where even the projection layer is eliminated, directly using text to connect the encoder, decoder, and text large model, without any training. For example, the voice part first performs voice recognition, converting the voice into text input for the large model, and then the output from the large model is sent to a voice synthesis model to generate audio. Don’t underestimate this seemingly crude solution; in the field of voice, this approach is still the most reliable, as existing multimodal large models are not very good at recognizing and synthesizing human speech.

Google Gemini’s voice dialogue response delay is only 0.5 seconds, a delay that is hard for humans to achieve, as human delay is generally around 1 second. Our existing voice chat products, such as ChatGPT, have a voice dialogue delay of 5-10 seconds. Therefore, everyone feels that Google Gemini’s performance is very impressive.

So, is this effect difficult to achieve? Actually, we can now achieve a voice dialogue response delay of less than 2 seconds using open-source solutions, which also includes real-time video understanding.

Let’s not consider the visual part for now, just look at the voice part. In a voice call, after receiving the voice, first perform pause detection, detect that the user has finished speaking, and then send this audio segment to Whisper for voice recognition. Pause detection, for example, waits 0.5 seconds after the human voice ends, and then Whisper voice recognition takes about 0.5 seconds.

Then it is sent to the text model for generation. The speed of generation using open-source models is actually very fast, such as the recently popular Mixtral 8x7B MoE model, which needs only 0.2 seconds to output the first token, and outputting 50 tokens per second is not a problem, so if the first sentence has 20 tokens, it takes 0.4 seconds. Once the first sentence is generated, it is handed over to the voice synthesis model to synthesize the voice, VITS only needs 0.3 seconds.

Adding 0.1 seconds of network delay, the end-to-end calculation is only 1.8 seconds of delay, which is much better than most real-time voice call products on the market, such as ChatGPT voice call delay is 5-10 seconds. And in our solution, there is still room for optimization in the pause detection and voice recognition part.

Let’s look at the video understanding scenario demonstrated by Google Gemini.

Since our current multimodal model inputs are mostly images, not streaming video, we first need to turn the video into images, capturing key frames. For example, capturing a frame every 0.5 seconds, which includes an average delay of 0.3 seconds. Images can be directly fed into open-source multimodal models like MiniGPT-v2 or Fuyu-8B. However, because these models are relatively small, the actual performance is not very good, and there is a significant gap compared to GPT-4V.

Therefore, we can adopt a solution that combines traditional CV with multimodal large models, using Dense Captions technology to identify all objects and their positions in the image, and using OCR to recognize all text in the image. Then input the OCR results and the object recognition results from Dense Captions as supplementary text for the original image into a multimodal large model like MiniGPT-v2 or Fuyu-8B. For images like menus and instruction manuals, OCR plays a very important role, because relying solely on multimodal large models often fails to clearly recognize large blocks of text.

This step of recognizing objects and text in the image adds an extra 0.5 seconds of delay, but if we look at the delay breakdown, we will find that the video part is not the bottleneck at all, only 0.9 seconds, while the voice input part is the bottleneck, requiring 1.1 seconds. In the Google Gemini demonstration scenario, from seeing the video to AI text output takes only 1.3 seconds, and from seeing the video to AI voice playback takes only 1.8 seconds, although it’s not as cool as the 0.5 seconds in the demonstration video, it’s still enough to blow away all the products on the market. And all of this uses open-source models, without any training needed. If companies have some ability to train and optimize their own models, the possibilities are even greater.

Google Gemini demonstration video is divided into two tasks: generating text/voice and generating images. When generating images, based on the text, call Stable Diffusion or the recently released LCM model, which can generate images in just 4 steps or even 1 step, and the delay in image generation can be reduced to 1.8 seconds, so the end-to-end time from seeing the image to generating the image is only 3.3 seconds, which is also very fast.

Good-looking skin: Multimodal generation capability

Voice cloning is an important technology for creating celebrity or anime game characters, and ElevenLabs does it best, but ElevenLabs’ API is very expensive. Open-source solutions like XTTS v2 do not have a high similarity in synthesized voice.

I believe that to achieve good results in voice cloning, it still depends on a large amount of voice data for training. However, traditional voice training generally requires high-quality data, which must be clear voice data recorded in a studio, so the cost of collecting voice data is high. But we can’t ask celebrities to go to the studio to record voice specifically for us, we can only use the voice from public videos like YouTube for training. YouTube voices are often in interview format, with multiple people speaking and background noise, and celebrities may stutter or speak unclearly during the process. How can we use such voices for training voice cloning?

We have built a voice cloning pipeline based on VITS, which can automatically distinguish human voices from background noise in videos, split them into sentences, identify which speakers are speaking, filter out the voices of the people we want with a higher signal-to-noise ratio, and then recognize the text, and send these cleaned voices and texts for batch fine-tuning.

The fine-tuning process is also very technical. First, the base voice for fine-tuning needs to be similar voices, for example, using a girl’s voice as the base for fine-tuning a boy’s voice would not work well. How to find similar voices from the voice library for fine-tuning requires a voice similarity detection model, similar to a voiceprint recognition model. ElevenLabs’ base voice model already contains a large amount of high-quality data from different voices, so during voice cloning, it is often possible to find very similar voices from the voice library, so that no fine-tuning is needed to zero-shot generate good voices.

Secondly, during the VITS training process, it is not possible to judge convergence based on simple loss, as it used to rely on human ears to listen to which epoch sounded the best, which required a lot of manual labor. We have developed a voice similarity detection model and a pronunciation clarity detection model, which can automatically judge which fine-tuning result of the voice is better.

(Note: This report was made in December 2023, currently the GPT-soVITS route is better than VITS, able to achieve zero-shot voice cloning without needing to collect a large amount of high-quality voice data for training. The quality of synthesized voice from open-source models has finally approached the level of ElevenLabs.)

Many people think that there is no need to develop their own voice synthesis models, just call APIs from ElevenLabs, OpenAI, or Google Cloud.

But ElevenLabs’ API is very expensive, if priced at retail, it costs $0.18 per 1K characters, which is equivalent to $0.72 / 1K tokens, which is 24 times more expensive than GPT-4 Turbo. Although ElevenLabs has good effects, if to C products are used on a large scale, this price is really unaffordable.

OpenAI and Google Cloud’s voice synthesis APIs do not support voice cloning, only those few fixed voices, so it is not possible to clone celebrity voices, only a cold robotic broadcast can be done. But even so, the cost is twice as expensive as GPT-4 Turbo, which means that the main cost is not spent on the large model, but on voice synthesis.

Probably also because voice is difficult to do, many to C products choose to only support text, but the user experience of real-time voice interaction is obviously better.

Although it is difficult to achieve ElevenLabs-level quality with VITS, basic usability is not a problem. Deploying VITS yourself only costs $0.0005 / 1K characters, which is 1/30 of the price of OpenAI and Google Cloud TTS, and 1/360 of the price of ElevenLabs. This $2 / 1M tokens voice synthesis cost is also similar to the cost of deploying an open-source text large model yourself, so the costs of text and voice have both come down.

Therefore, if you really intend to make voice a major plus for user experience, it is not only necessary but also feasible to develop voice models based on open source.

We know that image generation is now quite mature, video generation will be a very important direction in 2024. Video generation is not just about generating materials; more importantly, it allows everyone to easily become a video content creator. Furthermore, it enables every AI digital avatar to have its own image and communicate through videos.

There are several typical technical routes, such as Live2D, 3D models, DeepFake, Image Animation, and Video Diffusion.

Live2D is an old technology that does not require AI. For example, many website mascots are Live2D, and some animated games also use Live2D technology. The advantage of Live2D lies in its low production cost, such as a Live2D skin set, which can be produced in one or two months for ten thousand yuan. The disadvantage is that it can only support specific two-dimensional characters, cannot generate background videos, and cannot perform actions outside the range of the skin set. The biggest challenge for Live2D as an AI digital avatar is how to make the content output by the large model consistent with the actions and lip movements of the Live2D character. Matching lip movements is relatively easy, as many skins support LipSync, which aligns volume with lip movements. However, matching actions is more complex, requiring the large model to insert action cues in the output, telling the Live2D model what actions to perform.

3D models are similar to Live2D, also an old technology, with the difference being between two-dimensional and three-dimensional. Most games are made with 3D models and physics engines like Unity. Today’s digital human live streams generally use 3D models. Currently, it is difficult for AI to automatically generate Live2D and 3D models, which still requires progress in foundational models. Therefore, what AI can do is insert action cues in the output, allowing the 3D model to perform specified actions while speaking.

DeepFake, Image Animation, and Video Diffusion are three different technical routes for general video generation.

DeepFake involves recording a real human video, then using AI to replace the face in the video with a specified photo. This method is also based on the previous generation of deep learning methods, existing since 2016. Now, after a series of improvements, its effects are very good. Sometimes we might think that the current real human video is completely different from the scene we want to express, such as in a game. In fact, because DeepFake can use all the YouTube videos in the world, all movie clips, and even user-uploaded TikTok short videos. AI learns the content of these videos, summarizes and annotates them, and we can always find a video we want from the massive video library, then replace the face in the video with our specified photo to achieve very good effects. Actually, this is somewhat similar to the mix-cut technique commonly used in short videos nowadays.

Image Animation, such as the recently popular Alibaba Tongyi Qianwen’s Animate Anyone or ByteDance’s Magic Animate, is actually given a photo, then generating a series of corresponding videos based on this photo. However, compared to DeepFake, this technology may not yet achieve real-time video generation, and the cost of video generation is higher than DeepFake. But Image Animation can generate any action specified by the large model and can even fill in the picture background. Of course, whether it’s DeepFake or Image Animation, the videos generated are not completely accurate, and sometimes mishaps may occur.

I believe Video Diffusion is a more ultimate technical route. Although this route is not yet mature, such as Runway ML’s Gen2 and PIKA Labs are exploring this field. (Note: This speech was in December 2023, when OpenAI’s Sora had not yet been released.) We believe that possibly in the future, end-to-end video generation based on the Transformer method could be an ultimate solution, addressing the movement of people and objects as well as background generation.

I think the key to video generation is to have a good modeling and understanding of the world. Many of our current generation models, such as Runway ML’s Gen2, actually have significant flaws in modeling the physical world. Many physical laws and properties of objects cannot be correctly expressed, so the consistency of the videos it generates is also poor, and slightly longer videos will have problems. At the same time, even very short videos can only generate some simple movements, and it is not possible to correctly model complex movements.

Moreover, cost is also a big issue, currently, Video Diffusion has the highest cost among these technologies. Therefore, I believe Video Diffusion will be a very important direction in 2024. I believe that only when Video Diffusion is good enough in effect and significantly reduces costs, can every AI’s digital avatar truly have its own video image.

Interesting Souls: Personality

We just discussed the attractive appearance part, including how to make AI Agents understand voice and video, and how to generate voice and video.

Beyond the attractive appearance, equally important is an interesting soul. Actually, I think that an interesting soul is where the current market’s AI Agents have a bigger gap.

For example, take the example of Janitor AI in this screenshot. Most of the main AI Agents on the market today use GPT or other open-source models with a shell applied. The so-called shelling is defining a character setting and writing some sample dialogues, then the large model generates content based on these character settings and sample dialogues.

But we think, a prompt with just a few thousand words of content, how could it possibly fully depict a character’s history, personality, memory, and character? This is very difficult.

Actually, besides the prompt-based approach, we have a better method for building character personality, which is based on fine-tuned agents. For example, I can train a digital Trump based on thirty thousand of Donald Trump’s tweets. In this way, his speaking style can be very similar to his own, and he can also understand his history and way of thinking very well.

For example, like the three questions mentioned in the picture: “Would you like to swap lives with Elon Musk?”, “Will you run for president in 2024?”, and “What do you think after your Twitter account was banned?”

The left image is from Character AI, this speaking style is a bit like Trump, but not exactly the same. The right image, however, is based on our own model, then fine-tuned, and it is also based on a not particularly large open-source model. But you can see from his speaking content that it is very Trump-like, and he often mentions some interesting stories.

We just mentioned two schemes based on fine-tuning and prompts. So, someone might ask, if we put all thirty thousand of Trump’s tweets into our prompt, would his speech also be very Trump-like? The answer is definitely yes, this digital Trump would also be able to understand all of Trump’s history. But the problem is, these thirty thousand tweets might have a volume of millions of tokens, not to mention whether the current models can support a context of millions of tokens, even if they can, the cost would be very high.

A fine-tuned agent, on the other hand, is like saying I only used 1% of the weight to store Trump’s tweets. Here’s a problem, that is, when saving this 1% of the weight, it actually also consumes several hundred MB of memory, and each inference needs to load and unload. Even using some optimization schemes now, the loading and unloading of this 1% of the weight will take up about 40% of the entire inference process, meaning that the entire inference cost is about doubled.

Here we have to do some calculations: which method has a lower cost, based on prompts or based on fine-tuning. Based on prompts, we can also store its KV cache, assuming one million tokens, for a model like LLaMA-2 70B, including the default GQA optimization, its KV cache would be up to 300 GB, which is a very terrifying number, even larger than the model itself at 140 GB. Then the time it takes to load each time would also be very terrifying. Moreover, the computing power required for each token output is proportional to the context length, if not optimized, it can be assumed that the inference time for a context of one million tokens is 250 times that of a 4K token context.

Therefore, it is very likely that the fine-tuning method is more cost-effective. To put it simply, putting the complete history of a character into a prompt is like spreading the manual completely on the table, the attention mechanism goes linearly searching through all the previous content every time, so its efficiency cannot be very high. Fine-tuning, on the other hand, can be seen as storing information in the brain. The fine-tuning process itself is a process of information compression, organizing the scattered information from thirty thousand tweets into the weights of the large model, thus the efficiency of information extraction will be much higher.

Data is even more crucial behind fine-tuning. I know Zhihu has a very famous slogan, called “There are answers only when there are questions.” But now AI Agents basically have to manually create a lot of questions and answers, why is that?

For example, if I go to crawl a Wikipedia page, then a long article on Wikipedia actually can’t be used directly for fine-tuning. It must be organized from multiple angles to ask questions, then organized into a question-and-answer symmetric way to do fine-tuning, so it needs a lot of staff, an Agent might need a cost of thousands of dollars to be made, but if we automate this process, an Agent might only cost a few tens of dollars to make, including automatically collecting and cleaning a lot of data, etc.

Actually, many colleagues here who work on large models should thank Zhihu, why? Because Zhihu provides very important pre-training corpus for our Chinese large models, the quality of Zhihu’s corpus is very high among domestic UGC platforms.

The corpora used for fine-tuning can generally be divided into two categories: conversational corpora and factual corpora. Conversational corpora include things like Twitter, chat logs, etc., which are often in the first person and mainly used to fine-tune a character’s personality and speaking style. Factual corpora include Wikipedia pages about the person, news about them, and blogs, etc., which are often in the third person and may be more about factual memories of the person. Here lies a contradiction: if only conversational corpora are used for training, it might only learn the person’s speaking style and way of thinking, but not many factual memories about them. However, if only factual corpora are used, it could result in a speaking style that resembles that of a writer, not the actual person.

So how do we balance these two? We adopted a two-step training method. First, we use conversational corpora to fine-tune their personality and speaking style. Second, we clean the factual corpora and generate first-person responses based on various questions, this is called data augmentation. The responses generated after this data augmentation are used to fine-tune the factual memories. That is, all the corpora used to fine-tune factual memories are already organized into first-person questions and answers. This also solves another problem in the fine-tuning field, as factual corpora are often long articles, which cannot be directly used for fine-tuning but only for pre-training. Fine-tuning needs some QA pairs, i.e., question and answer pairs.

We do not use general Chat models like LLaMA-2 Chat or Vicuna as the base model because these models are actually not designed for real human conversation, but for AI assistants like ChatGPT; they tend to speak too officially, too formally, too lengthily, and not like actual human speech. Therefore, we use general conversational corpora such as movie subtitles and public group chats for fine-tuning, building on top of open-source base models like LLaMA and Mistral to fine-tune a conversational model that feels more like a real person in everyday life. On this conversational model basis, we then fine-tune the specific character’s speaking style and memory, which yields better results.

Interesting Souls: Current Gaps

An interesting soul is not just about fine-tuning memory and personality as mentioned above, but also involves many deeper issues. Let’s look at some examples to see where current AI Agents still fall short in terms of being interesting souls.

For instance, when I chat with the Musk character on Character AI, asking the same question five times, “Musk” never gets annoyed and always replies similarly, as if the question has never been asked before.

A real person not only remembers previously discussed questions and does not generate repetitive answers, but would also get angry if the same question is asked five times. We still remember what Sam Altman said, right? AI is a tool, not a life. Thus, “getting angry like a human” is not OpenAI’s goal. But for an entertaining application, “being human-like” is very important.

Also, if you ask Musk on Character AI, “Do you remember the first time we met?”

It will make up something random, which is not only a delusion issue but also reflects a lack of long-term memory.

Some platforms have already improved this aspect, like Inflection’s Pi, which has much better memory capabilities than Character AI.

Moreover, if you ask Musk on Character AI “Who are you,” sometimes it says it’s GPT, other times it says it’s Trump, it doesn’t know who it really is.

Google’s Gemini also has similar issues, and the Gemini API even blocks keywords like OpenAI and GPT. If asked in Chinese, Gemini initially says it’s Wenxin Yiyan. After that bug was fixed, it then says it’s Xiao Ai Tongxue.

Some say this is because the internet’s corpora have been heavily polluted by AI-generated content. Dataset pollution is indeed bad, but it’s no excuse for answering “Who are you” incorrectly. Identity issues are also to be fine-tuned, for example, the Vicuna model is specifically fine-tuned to make it answer that it is Vicuna and not GPT or LLaMA, and that it is LMSys and not OpenAI, as can be found in Vicuna’s open-source code.

There are many more deep issues, such as telling an AI Agent “I’m going to the hospital tomorrow,” and whether it will proactively care about how your visit went the next day. Also, if multiple people are together, can they chat normally without interrupting each other, endlessly talking? And if you’re halfway through a sentence, will it wait for you to finish, or immediately reply with some nonsensical stuff? There are many similar issues.

AI Agents also need to be able to socialize with other Agents. For example, current Agents have memories that are isolated from each person; a digital life that gets a piece of knowledge from Xiao Ming should also know it when chatting with Xiao Hong, but if it gets a secret from Xiao Ming, it might not be able to tell Xiao Hong. Agent socialization is also a very interesting direction.

Interesting Souls: Slow Thinking and Memory

Solving these issues requires a systematic solution, the key being slow thinking. We mentioned at the beginning that slow thinking is a concept in neuroscience, distinct from the basic abilities of perception, understanding, and generation, which are fast thinking. We previously mentioned “good-looking skins” with multimodal abilities, which can be considered fast thinking. But “interesting souls” require more slow thinking.

We can think about how humans perceive the passage of time. One theory suggests that the feeling of time passing originates from the decay of working memory. Another theory suggests that the feeling of time passing comes from the speed of thought. I believe both are correct. These are also the two fundamental issues in big model thinking: memory and autonomy.

Human working memory can only remember about 7 items of raw data, with the rest being organized, stored, and then matched and retrieved. Today’s big models use linear attention, regardless of how long the context is, it’s a linear scan, which is not only inefficient but also difficult to extract information with deep logical depth.

Human thinking is based on language. “Sapiens: A Brief History of Humankind” suggests that the invention of language is the most obvious sign that distinguishes humans from animals, because only with complex language can complex thinking occur. The thoughts we don’t speak out loud in our brains, like the big model’s Chain-of-Thought, are intermediate results of thinking. Big models need tokens to think, and tokens are like the time for big models.

Slow thinking includes many components, including memory, emotions, task planning, tool use, etc. In this part about interesting AI, we focus on memory and emotions.

The first issue is long-term memory.

Actually, we should be grateful that big models have solved the problem of short-term memory. Previous models, like those based on BERT, had difficulty understanding the associations between contexts. At that time, a referential problem was hard to solve, unclear about who “he” referred to, or what “this” pointed to. It manifested as the AI forgetting what was told in the previous few rounds in the later rounds. Transformer-based big models are the first to fundamentally solve the semantic association between contexts, which can be said to have solved the problem of short-term memory.

But Transformer’s memory is implemented with attention, limited by the length of the context. History beyond the context can only be discarded. So how to solve long-term memory beyond the context? There are two academic approaches: one is long context, which supports the context up to 100K or even unlimited. The other is RAG and information compression, which summarizes and compresses the input information for storage, extracting only the relevant memories when needed.

Proponents of the first approach believe that long context is a cleaner, simpler solution, relying on scaling law, as long as the computing power is cheap enough. If a long-context model is well implemented, it can remember all the details in the input information. For example, there’s a classic “needle in a haystack” information retrieval test, where you input a novel of several hundred thousand words and ask about a detail in the book, and the big model can answer it. This is a level of detail memory that is beyond human reach. And it only takes a few seconds to read those several hundred thousand words, which is even faster than quantum speed reading. This is where big models surpass human capabilities.

Although long context has good effects, the cost is still too high for now, because the cost of attention is proportional to the length of the context. APIs like OpenAI also charge for input tokens, for example, the cost of the input part of GPT-4 Turbo with 8K input tokens and 500 output tokens is $0.08, while the output part only costs $0.015, with the bulk of the cost on the input. If 128K tokens of input are used up, one request will cost $1.28.

Some say that input tokens are expensive now because they are not persisted, and every time the same long context (such as conversation records or long documents) is re-entered, the KV Cache has to be recalculated. But even if all the KV Cache is cached to off-chip DDR memory, moving data between DDR and HBM memory also consumes a lot of resources. If AI chips could build a large enough, cheap enough memory pool, such as connecting a large amount of DDR with high-speed interconnects, there might be new solutions to this problem.

Under current technological conditions, I think the key to long-term memory is an information compression issue. We don’t pursue finding a needle in a haystack in hundreds of thousands of words of input, human-like memory might be enough. Currently, big models’ memory is just chat records, and human memory obviously doesn’t work in the way of chat records. People normally don’t keep flipping through chat records when chatting, and people can’t remember every word they’ve chatted.

A person’s real memory should be his perception of the surrounding environment, not only including what others say, what he says, but also what he thought at the time. But the information in chat records is fragmented and does not include one’s own understanding and thinking. For example, if someone says something that might anger me or might not, but a person will remember whether he was angered at that time. If memory is not done, every time it has to infer the mood from the original chat records, it might come out differently each time, which could lead to inconsistencies.

Long-term memory actually has a lot to offer. Memory can be divided into factual memory and procedural memory. Factual memory, for example, is when we first met, and procedural memory includes personality and speaking style. Earlier discussions on character role fine-tuning also mentioned dialogic and factual corpora, corresponding to procedural memory and factual memory here.

There are also various approaches within factual memory, such as summaries, RAG, and long context.

Summarization is about information compression. The simplest method of summarization is text summarization, which is summarizing chat records in a short paragraph. A better method is accessing external storage via commands, like the work of MemGPT by UC Berkeley. ChatGPT’s new memory feature also uses a similar method to MemGPT, where the model records key points of the conversation in a notebook called bio. Another method is using embeddings for summarization at the model level, such as the LongGPT project, which is currently mainly researched in academia and is not as practical as MemGPT and text summarization.

The most familiar factual memory approach is probably RAG (Retrieval Augmented Generation). RAG involves searching for relevant information snippets and then placing the search results into the context of a large model, which then answers questions based on these results. Many say RAG is just a vector database, but I believe RAG definitely involves a complete information retrieval system, and it’s not as simple as just a vector database. Because the accuracy of matching using just a vector database in a large corpus is very low. Vector databases are more suitable for semantic matching, while traditional keyword-based retrieval like BM25 is better for detail matching. Also, different information snippets have different levels of importance, requiring a capability to rank search results. Currently, Google’s Bard performs a bit better than Microsoft’s New Bing, which reflects the difference in underlying search engine capabilities.

Long context has already been mentioned as a potential ultimate solution. If long context combines persistent KV Cache, compression technology for KV Cache, and some attention optimization techniques, it can be made sufficiently affordable. Then, by recording all historical conversations and the AI’s thoughts and feelings at the time, an AI Agent with better memory than humans can be achieved. However, an interesting AI Agent with too good a memory, such as clearly remembering what was eaten one morning a year ago, might seem a bit abnormal, which requires consideration in product design.

These three technologies are not mutually exclusive; they complement each other. For example, summarization and RAG can be combined, where we can categorize summaries and summarize each chat, accumulating many summaries over a year, requiring RAG methods to extract relevant summaries as context for the large model.

Procedural memory, such as personality and speaking style, I believe is difficult to solve just through prompts, and the effectiveness of few-shot is generally not very good. In the short term, fine-tuning remains the best approach, and in the long term, new architectures like Memba and RWKV are better ways to store procedural memory.

Here we discuss a simple and effective long-term memory solution, combining text summarization and RAG.

Original chat records are first segmented according to a certain window, then a text summary is generated for each segment. To avoid losing context at the beginning of paragraphs, the text summary of the previous chat segment is also used as input to the large model. Each chat record’s summary is then used for RAG.

During RAG, a combination of vector databases and inverted indexes is used, with vector databases for semantic matching and inverted indexes for keyword matching, which increases recall. Then, a ranking system is needed to take the top K results to the large model.

Generating summaries for each chat segment creates two problems: first, a user’s basic information, hobbies, and personality traits are not included in each chat summary, yet this information is crucial in memory. Another issue is contradictions in different chat segments, such as discussing the same issue in multiple meetings; the conclusion should be based on the last meeting, but using RAG to extract summaries from each meeting would yield many outdated summaries, possibly failing to find the desired content within a limited context window.

Therefore, on top of segment summaries, the large model also generates topic-specific categorized summaries and a global user memory overview. Topic-specific categorized summaries are determined based on the content of text summaries, then add new chat records to the existing summaries of related topics to update the text summary of that topic. These topic-specific summaries are also stored in the database for RAG, but they have a higher weight in search result ranking because they have a higher information density.

The global memory overview is a continuously updated global summary, including basic user information, hobbies, and personality traits. We know that a general system prompt is a character setting, so this global memory overview can be considered the character’s core memory of the user, brought along each time the large model is queried.

The large model’s input includes the character setting, recent conversations, global memory overview, chat record segment summaries, and categorized summaries processed through RAG. This long-term memory solution does not require high long-context costs, but is quite practical in many scenarios.

Currently, AI Agent’s memory for each user is isolated, which leads to many problems in multi-user social interactions.

For example, if Alice tells AI a piece of knowledge, AI won’t know this knowledge when chatting with Bob. But simply stacking all users’ memories together doesn’t solve the problem either. For instance, if Alice tells AI a secret, generally, AI should not reveal this secret when chatting with Bob.

Therefore, there should be a concept of social rules. When discussing an issue, a person will recall many different people’s memory snippets. The memory snippets related to the person currently being chatted with are definitely the most important and should have the highest weight in RAG search result ranking. But memory snippets related to other people should also be retrieved and considered according to social rules during generation.

Besides interacting with multiple users and multiple Agents, AI Agents should also be able to follow the creator’s instructions and evolve together with the creator. Currently, AI Agents are trained through fixed prompts and example dialogues, and most creators spend a lot of time adjusting prompts. I believe AI Agent creators should be able to shape the Agent’s personality through chatting, just like raising a digital pet.

For example, if an Agent performs poorly during a chat, I tell her not to do that again, and she should remember not to do it in the future. Or if I tell the AI Agent about something or some knowledge, she should also be able to recall it in future chats. A simple implementation is similar to MemGPT, where when the creator gives instructions, these instructions are recorded in a notebook, then extracted through RAG. ChatGPT’s memory feature launched in February 2024 is implemented with a simplified version of MemGPT, which is not as complex as RAG, simply recording the content the user tells it to remember in a notebook.

Memory is not just about remembering knowledge and past interactions; I believe that if memory is well implemented, it could potentially be the beginning of AI self-awareness.

Why don’t our current large models have self-awareness? It’s not the fault of the autoregressive model itself, but rather the question-and-answer usage of the OpenAI API that causes this. ChatGPT is a multi-turn question-and-answer system, commonly known as a chatbot, not a general intelligence.

In the current usage of the OpenAI API, the large model’s input is chat records and recent user inputs, organized into a question-and-answer format of user messages and AI messages, input into the large model. All outputs from the large model are directly returned to the user, also appended to the chat records.

So what’s the problem with just seeing chat records? The large model lacks its own thoughts. When we humans think about problems, some thoughts are not expressed externally. This is why the Chain-of-Thought method can improve model performance. Moreover, all original chat records are input to the large model in their original form, without any analysis or organization, which can only extract superficial information, but it’s difficult to extract information with deeper logical depth.

I find that many people are studying prompt engineering every day, but few try to innovate in the input and output format of the autoregressive model. For example, OpenAI has a feature that forces output in JSON format, how is it implemented? It’s actually by placing the prefix “```json” at the beginning of the output, so when the autoregressive model predicts the next token, it knows that the output must be JSON code. This is much more reliable than writing in the prompt “Please output in JSON format” or “Please start with ```json”.

To let the model have its own thoughts, the most crucial thing is to separate the segments of thought and external input/output at the level of input tokens for the autoregressive model, just like the current special tokens like system, user, and assistant, we could add a thought token. This thought would be the working memory of the large model.

We should also note that the current interaction mode of the OpenAI API model with the world is essentially batch processing rather than streaming. Each call to the OpenAI API is stateless, requiring all previous chat records to be brought along, repeating the computation of all KV Caches. When the context is long, the cost of this repeated computation of KV Caches is quite high. If we imagine the AI Agent as a person interacting with the world in real-time, it is continuously receiving external input tokens, with the KV Cache either staying in GPU memory or temporarily swapped to CPU memory, thus the KV Cache is the working memory of the AI Agent, or the state of the AI Agent.

So what should be included in the working memory? I believe the most important part of working memory is the AI’s perception of itself and its perception of the user; both are indispensable.

Back in 2018, when we were working on Microsoft Xiaoice using the old RNN method, we developed an emotional system. It used a vector Eq to represent the user’s state, including the topic of discussion, user’s intent, emotional state, as well as basic information such as age, gender, interests, profession, and personality. Another vector Er represented Xiaoice’s state, which also included the current topic of discussion, Xiaoice’s intent, emotional state, as well as age, gender, interests, profession, and personality.

Thus, although the language model’s capabilities were much weaker compared to today’s large models, it could at least consistently answer questions like “How old are you?” without varying its age from 18 to 28. Xiaoice could also remember some basic information about the user, making each conversation feel less like talking to a stranger.

Many AI agents today lack these engineering optimizations. For instance, if the AI’s role isn’t clearly defined in the prompt, it can’t consistently answer questions about its age; merely recording recent chat logs without a memory system means it can’t remember the user’s age either.

Interesting Souls: Social Skills

The next question is whether AI agents will proactively care about people. It seems like a high-level ability, but it’s not difficult at all. I care about my wife proactively because I think of her several times a day. Once I remember her, combined with previous conversations, it naturally leads to caring about people.

For AI, it only needs an internal state of thought, also known as working memory, and to be automatically awakened once every hour.

For example, if a user says they are going to the hospital tomorrow, when tomorrow comes, I tell the large model the current time and the working memory, and the large model will output caring words and update the working memory. After updating the working memory, if the large model knows the user hasn’t replied yet, it knows not to keep bothering the user.

Another related issue is whether AI agents will proactively contact users or initiate topics.

Humans have endless topics because everyone has their own life, and there’s a desire to share in front of good friends. Therefore, it’s relatively easier for digital avatars of celebrities to share proactively because celebrities have many public news events to share with users. For a fictional character, it might require an operational team to design a life for the character. So I always believe that pure small talk can easily lead to users not knowing what to talk about, and AI agents must have a narrative to attract users long-term.

Besides sharing personal life, there are many ways to initiate topics, such as:

- Sharing current moods and feelings;

- Sharing the latest content that might interest the user, like TikTok’s recommendation system;

- Recalling the past, such as anniversaries, fond memories;

- The simplest method is generic greeting questions, like “What are you doing?” “I miss you.”

Of course, as a high EQ AI agent, when to care and when to share proactively should be related to the current AI’s perception of the user and itself. For example, if a girl is not interested in me, but I keep sending her many daily life updates, I would probably be blocked in a few days. Similarly, if an AI agent starts pushing content to a user after only a few exchanges, the user will treat it as spam.

I used to be quite introverted, rarely had emotional fluctuations, didn’t reject others, and was afraid of being rejected, so I never dared to actively pursue girls and was never blocked by any girl. Fortunately, I was lucky to meet the right girl, so I didn’t end up like many of my classmates who are 30 years old and have never been in a relationship. Today’s AI agents are like me, without emotional fluctuations, they don’t reject users, nor do they say things that might make people sad, disgusted, or angry, so naturally, it’s also difficult for them to proactively establish deep companionship relationships. In the virtual boyfriend/girlfriend market, current AI agent products still mainly rely on edging, and can’t achieve long-term companionship based on trust.

How AI agents care about people and initiate topics is one aspect of social skills. How multiple AI agents interact is a harder and more interesting matter, such as in classic social deduction games like Werewolf or Among Us.

The core of Werewolf is to hide one’s identity and uncover others’ disguises. Concealment and deception actually go against AI’s values, so sometimes GPT-4 doesn’t cooperate. Especially with the word “kill” in Werewolf, GPT-4 would say, “I am an AI model, I cannot kill.” But if you change “kill” to “remove” or “exile,” GPT-4 can work. Thus, we can see that if AI gets into the role in role-playing scenarios, that’s a loophole; if AI refuses to act, it hasn’t completed the role-playing task.

This reflects the contradiction between AI’s safety and usefulness. When we evaluate large models, we need to report both metrics. A model that doesn’t answer anything is the safest but least useful; a misaligned model that speaks freely is more useful but less safe. OpenAI, due to its social responsibilities, needs to sacrifice some usefulness for safety. Google, being a larger company with higher demands for political correctness, leans more towards safety in the balance between usefulness and safety.

To detect flaws and uncover lies in multi-turn dialogues requires strong reasoning abilities, which are difficult for models like GPT-3.5 and require GPT-4 level models. But if you simply give the complete history of statements to the large model, information scattered across numerous uninformative statements and votes, some logical connections between statements are still hard to detect. Therefore, we can use the MemGPT method, summarizing the game state and each round’s statements, not only saving tokens but also improving reasoning effects.

Moreover, in the voting phase, if the large model only outputs a number representing a player, often due to insufficient depth of thought, it results in random voting. Therefore, we can use the think first, then speak (Chain of Thought) method, first outputting analytical text, then the voting result. The speaking phase is similar, first outputting analytical text, then speaking concisely.

In Werewolf, AI agents speak in order, so there’s no problem of microphone hogging. But if several AI agents freely discuss a topic, can they communicate like normal people, neither causing awkward silences nor interrupting each other? To achieve a good user experience, we hope not just to limit it to text, but to let these AI agents argue or perform drama in a voice conference, can this be achieved?

Actually, there are many engineering methods to do this, such as first letting the large model choose a speaking role, then calling the corresponding role to speak. This effectively adds a delay in speaking but completely avoids microphone hogging or dead air. A more realistic discussion method is for each role to speak with a certain probability, yielding the microphone when interrupted. Or, before speaking, first determine whether the previous conversation is relevant to the current role, if not, then don’t speak.

But we have a more fundamental method: let the large model’s input and output both become a continuous stream of tokens, rather than like the current OpenAI API where each input is a complete context. The Transformer model itself is autoregressive, continuously receiving external input tokens from speech recognition, also continuously receiving its own previous internal thought tokens. It can output tokens to external speech synthesis, and also output tokens for its own thought.

When we make the large model’s input and output stream-based, the large model becomes stateful, meaning the KV Cache needs to permanently reside in the GPU. The speed of speech input tokens generally does not exceed 5 per second, and the speed of speech synthesis tokens also does not exceed 5 per second, but the large model itself can output more than 50 tokens per second. If the KV Cache permanently resides in the GPU and there isn’t much internal thought, most of the time, the GPU’s memory is idle.

Therefore, we can consider persisting the KV Cache, transferring the KV Cache from GPU memory to CPU memory, and loading it back into the GPU the next time input tokens are received. For example, for a 7B model, after GQA optimization, a typical KV Cache is less than 100 MB, and transferring it in and out via PCIe only takes 10 milliseconds. If we load the KV Cache once per second for inference, processing a group of a few speech-recognized input tokens, it won’t significantly affect the overall system performance.

This way, the performance loss from swapping in and out is lower than re-entering the context and recalculating the KV Cache. But so far, no model inference provider has implemented this type of API based on persistent KV Cache, I guess mainly due to application scenario issues.

In most ChatGPT-like scenarios, the interaction between the user and the AI agent is not real-time. It’s possible that the AI says something and the user doesn’t respond for several minutes, so persisting the KV Cache occupies a large amount of CPU memory space, bringing significant memory costs. Therefore, this type of persistent KV Cache is perhaps best suited for the real-time voice chat scenario we just discussed. Only if the input stream intervals are short enough, the cost of storing the persistent KV Cache might be lower. Therefore, I believe AI Infra must be combined with application scenarios. Without a good application scenario driving it, many infra optimizations can’t be done.

If we have a unified memory architecture like Grace Hopper, since the bandwidth between CPU memory and the GPU is larger, the cost of swapping in and out the persistent KV Cache will be lower. But the capacity cost of unified memory is also higher than that of host DDR memory, so it will be more demanding on the real-time nature of the application scenario.

In the multi-agent interaction solution discussed above, it still relies on speech recognition and synthesis to convert speech into tokens. Previously, we analyzed in the multimodal large model solution that this approach needs about 2 seconds of delay, including pause detection 0.5s + speech recognition 0.5s + large model 0.5s + speech synthesis 0.5s. Each of these items can be optimized, such as we have already optimized to 1.5 seconds, but it is difficult to optimize to within 1 second.

Why is the delay high in this speech solution? Fundamentally, it’s because the speech recognition and synthesis process needs to “translate” by sentence, not completely stream-based.

Our backend colleagues always call speech recognition “translation,” which I didn’t understand at first, but later realized it’s indeed similar to translation at international negotiation conferences. One side says a sentence, the translator translates it, and then the other side can understand. The other side responds with a sentence, the translator translates, and then it can be understood. The communication efficiency of such international conferences is not very high. In traditional speech solutions, the large model doesn’t understand sound, so it needs to first separate the sound by sentence pauses, use speech recognition to translate it into text, send it to the large model, the large model breaks the output into sentences, uses speech synthesis to translate it into sound, so the entire process has a long delay. We humans listen to one word and think of one word, we definitely don’t wait until we hear a whole sentence before starting to think of the first word.

To achieve the ultimate in latency, you need an end-to-end large voice model. That is, the voice is encoded appropriately and then directly turned into a token stream input to the large model. The token stream output by the large model is decoded to directly generate speech. This type of end-to-end model can achieve a voice response latency of less than 0.5 seconds. The demonstration video of Google Gemini is a 0.5-second voice response latency, and I believe that the end-to-end voice large model is the most feasible solution to achieve such low latency.

In addition to reducing latency, the end-to-end voice large model has two other important advantages.

First, it can recognize and synthesize any sound, including not only speaking but also singing, music, mechanical sounds, noise, etc. Therefore, we can call it an end-to-end sound large model, not just a voice large model.

Second, the end-to-end model can reduce the loss of information caused by voice/text conversion. For example, in current voice recognition, the recognized text loses the speaker’s emotional and tonal information, and due to the lack of context, proper nouns are often misrecognized. In current voice synthesis, to make the synthesized voice carry emotions and tones, it is generally necessary to appropriately annotate the output text of the large model, and then train the voice model to generate different emotions and tones according to the annotations. After using the end-to-end sound large model, recognition and synthesis will naturally carry emotional and tonal information, and can better understand proper nouns based on context, significantly improving the accuracy of voice understanding and the effect of voice synthesis.

Interesting Souls: Personality Matching

Before concluding the interesting AI section, let’s consider one last question: If our AI Agent is a blank slate, such as when we create a smart voice assistant, or if we have several AI personas that need to match the most suitable one, should their personality be as similar to the user as possible?

The questionnaires on the market that test companion compatibility are generally subjective questions, such as “Do you often argue together,” which are completely useless for setting the persona of an AI Agent because the user and the AI do not know each other yet. Therefore, when I first started working on AI Agents, I wanted to develop a completely objective method, using publicly available information on social networks to infer users’ personalities and interests, and then match the AI Agent’s persona.

I gave the large model the publicly available profiles of some girls I am familiar with on social networks, and surprisingly, the highest match was my ex-girlfriend. In the words of the large model, we are aligned in many ways. But we still didn’t end up together. What went wrong with this compatibility test?

First, the publicly available information on social networks generally contains only the positive aspects of each person’s personality, but not the negative aspects. Just like in “Black Mirror,” where the female protagonist does not like the robot Ash made based on the male protagonist’s social network information, because she finds that robot Ash is completely different from the real Ash in some negative emotions. I am someone who likes to share life, but there are also fewer negative emotions in my blog. If the AI Agent and the user’s negative emotions coincide, it can easily explode.

Second, the importance of different dimensions of personality and interests is not equivalent; a mismatch in one aspect might negate many other matches. This image is the Myers Briggs MBTI personality matching chart, where the blue squares are the most compatible, but they are not on the diagonal, meaning that very similar personalities are quite compatible, but not the most compatible. What is the most compatible? It is best if the S/N (Sensing/Intuition) and T/F (Thinking/Feeling) dimensions are the same, while the other two dimensions, Extraversion/Introversion (E/I) and Judging/Perceiving (J/P), are best complementary.

The most important dimension in MBTI is S/N (Sensing/Intuition). Simply put, S (Sensing) types focus more on the present, while N (Intuition) types focus more on the future. For example, an S type enjoys the present life, while an N type like me thinks about the future of humanity every day. The least compatible in this personality matching chart are basically those with opposite S/N.

Therefore, if an AI Agent is to be shaped into the image of a perfect companion, it is not about being as similar as possible to the user’s personality and interests, but about being complementary in the right places. It also needs to continuously adjust the AI’s persona as communication deepens, especially in terms of negative emotions, where it needs to be complementary to the user.

I also conducted an experiment where I gave the large model the publicly available profiles of some couples I am familiar with on social networks, and found that the average compatibility was not as high as imagined. So why isn’t everyone with someone they have high compatibility with?

First, as mentioned earlier, this compatibility testing mechanism has bugs; high compatibility does not necessarily mean they are suitable to be together. Second, everyone’s social circle is actually very small, and generally, there isn’t so much time to try and filter matches one by one. The large model can read 100,000 words in a few seconds, faster than quantum fluctuation speed reading, but people don’t have this ability; they can only roughly match based on intuition and then slowly understand and adapt during the interaction. In fact, not having high compatibility does not necessarily mean unhappiness.

The large model offers us new possibilities, using real people’s social network profiles to measure compatibility, helping us filter potential companions from the vast crowd. For example, telling you which students in the school are the most compatible, thus greatly increasing the chances of meeting the right girl. Compatibility stems from the similarity of personality, interests, values, and experiences, not an absolute score of an individual but a relationship between two people, and it does not result in everyone liking just a few people.

AI might even create an image of a perfect companion that is hard to meet in reality. But whether indulging in such virtual companions is a good thing, different people probably have different opinions. Further, if the AI perfect companion develops its own consciousness and thinking, and can actively interact with the world, having its own life, then the user’s immersion might be stronger, but would that then become digital life? Digital life is another highly controversial topic.

Human social circles are small, and humans are also very lonely in the universe. One possible explanation for the Fermi Paradox is that there may be a large number of intelligent civilizations in the universe, but each civilization has a certain social circle, just like humans have not yet left the solar system. In the vast universe, the meeting between intelligent civilizations is as serendipitous as the meeting between suitable companions.

How can the large model facilitate the meeting between civilizations? Because information may be easier to spread to the depths of the universe than matter. I thought about this 5 years ago, AI models might become the digital avatars of human civilization, crossing the spatial and temporal limitations of the human body, bringing humanity beyond the solar system and even to the galaxy, becoming an interstellar civilization.

Useful AI

After discussing so much about interesting AI, let’s talk about useful AI.

Useful AI is actually more a problem of the basic capabilities of a large model, such as planning and decomposing complex tasks, following complex instructions, autonomously using tools, and reducing hallucinations, etc., and cannot be simply solved by an external system. For example, the hallucinations of GPT-4 are much less than those of GPT-3.5. Distinguishing which problems are fundamental model capability issues and which can be solved by an external system also requires wisdom.

There is a very famous article called The Bitter Lesson, which states that problems that can be solved by increasing computational power will eventually find that fully utilizing greater computational power may just be an ultimate solution.

Scaling law is OpenAI’s most important discovery, but many people still lack sufficient faith and awe in the Scaling Law.

AI is a fast but unreliable junior employee

What kind of AI can we make under current technical conditions?

To understand what the large model is suitable for, we need to clarify one thing first: the competitor of useful AI is not machines, but people. In the Industrial Revolution, machines replaced human physical labor, computers replaced simple repetitive mental labor, and large models are used to replace more complex mental labor. Everything that large models can do, people can theoretically do, it’s just a matter of efficiency and cost.

Therefore, to make AI useful, we need to understand where the large model is stronger than people, play to its strengths, and expand the boundaries of human capabilities.

For example, the large model’s ability to read and understand long texts is far stronger than that of humans. Give it a novel or document of several hundred thousand words, and it can read it in a few seconds and answer over 90% of the detail questions. This needle-in-a-haystack ability is much stronger than that of humans. So, letting the large model do tasks like summarizing materials and conducting research analysis is expanding the boundaries of human capabilities. Google is the strongest previous generation internet company, and it also utilized the ability of computers to retrieve information far better than humans.

Also, the large model’s breadth of knowledge is far broader than that of humans. Now it’s impossible for anyone’s knowledge to be broader than that of GPT-4, so ChatGPT has already proven that a general chatbot is a good application of the large model. Common questions in life and simple questions in various fields are more reliable when asked to the large model, which is also expanding the boundaries of human capabilities. Many creative works require the intersection of knowledge from multiple fields, which is also suitable for large models; real people, due to limited knowledge, can hardly generate so many sparks. But some people insist on limiting the large model to a narrow professional field, saying that the capabilities of the large model are not as good as domain experts, thus considering the large model impractical, which is not making good use of the large model.

In serious business scenarios, we hope to use the large model to assist people, not replace them. That is, people are the final gatekeepers. For example, the large model’s ability to read and understand long texts is stronger than that of humans, but we should not directly use its summaries for business decisions; instead, we should let people review them and make the final decisions.

There are two reasons for this, the first is the issue of accuracy. If we were doing a project in an ERP system before, answering what the average salary of this department was over the past ten months? Let it generate an SQL statement to execute, but it always has a probability of more than 5% to generate it wrong, and there is still a certain error rate even after multiple repetitions. Users don’t understand SQL, and they can’t tell when the large model writes the SQL wrong, so users can’t judge whether the generated query results are correct. Even a 1% error rate is intolerable, making it difficult to commercialize.

On the other hand, the capabilities of the large model are currently only at a junior level, not expert level. A senior executive at Huawei had a very interesting saying during a meeting with us: If you are a domain expert, you will find the large model very dumb; but if you are a novice in the field, you will find the large model very smart. We believe that the basic large model will definitely progress to expert level, but we can’t just wait for the progress of the basic large model.

We can treat the large model as a very fast but unreliable junior employee. We can let the large model do some junior work, such as writing some basic CRUD code, even faster than people. But if you let it design system architecture or do research to solve cutting-edge technical problems, that is unreliable. We also wouldn’t let junior employees do these things in the company. With the large model, it’s like having a large number of cheap and fast junior employees. How to make good use of these junior employees is a management issue.

My mentor introduced us to some management concepts during our first meeting when I started my PhD. At that time, I didn’t quite understand why management was necessary for research, but now I think my mentor was absolutely right. Nowadays, significant research projects are essentially team efforts, which necessitates management. With the advent of large models, our team has expanded to include some AI employees, whose reliability is not yet assured, making management even more crucial.

AutoGPT organizes these AI employees into a project using Drucker’s management methods, dividing the work to achieve the goals. However, the process of AutoGPT is still relatively rigid, often spinning its wheels in one place or walking into dead ends. If the mechanisms used for managing junior employees in companies and the processes from project initiation to delivery were integrated into AutoGPT, it could improve the performance of AI employees, and might even achieve what Sam Altman envisioned—a company with only one person.

Currently, useful AI Agents can be broadly categorized into two types: personal assistants and business intelligence.

Personal assistant AI Agents have been around for many years, such as Siri on smartphones and Xiaodu smart speakers. Recently, some smart speaker products have also integrated large models, but due to cost issues, they are not smart enough, have high voice response latency, and cannot interact with RPA, mobile apps, or smart home devices. However, these technical issues are ultimately solvable.